Primer: To understand Blockchain Scalability, we must first understand Blockchain. Put simply, a blockchain is a distributed digital database that facilitates transactions by enabling an immutable, transparent, and shared ledger. This ledger is designed to achieve decentralized transaction management. Any member node can autonomously administer and manage transactions by complying with the agreements/rules laid out and without any third-party interference. Consensus on data accuracy is achieved by a network of distributed users (nodes). Upon verification, transactions are recorded permanently, delivering immutability. These transactions are recorded in blocks. When a block is filled, a new block is generated and chained onto the previous block. The blocks are inextricably linked together in chronological order forming a chain, hence the blockchain.
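The chaining of blocks described above can be illustrated with a minimal Python sketch. It assumes each block is identified by a SHA-256 hash of a JSON encoding of its contents. `Block`, `append_block`, and `is_valid` are hypothetical names, and real blockchains add headers, timestamps, Merkle roots, and consensus data that are omitted here.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class Block:
    # Hypothetical, simplified block layout for illustration only.
    index: int
    prev_hash: str
    transactions: list = field(default_factory=list)

    def hash(self) -> str:
        # Hash a canonical JSON encoding of the block's contents.
        payload = json.dumps(
            {"index": self.index, "prev_hash": self.prev_hash, "txs": self.transactions},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode()).hexdigest()


def append_block(chain: list, transactions: list) -> None:
    # Each new block stores the hash of the block before it.
    prev_hash = chain[-1].hash() if chain else "0" * 64
    chain.append(Block(len(chain), prev_hash, transactions))


def is_valid(chain: list) -> bool:
    # Recompute every link; editing an earlier block breaks the link after it.
    return all(chain[i].prev_hash == chain[i - 1].hash() for i in range(1, len(chain)))


chain: list = []
append_block(chain, ["genesis"])
append_block(chain, ["alice->bob:5"])
append_block(chain, ["bob->carol:2"])
print(is_valid(chain))  # True
chain[1].transactions[0] = "alice->bob:500"  # tamper with recorded history
print(is_valid(chain))  # False
```

Changing any earlier block changes its hash, so the next block’s stored `prev_hash` no longer matches. This is what makes the recorded history tamper-evident.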

Broadly, scalability refers to a system’s ability to adapt to an increased workload efficiently and swiftly. As it pertains to blockchain, scalability refers to the network’s ability to sustain a growing number of transactions and nodes, and it is primarily determined by throughput (transaction rate), cost and capacity, and networking. Scalability is therefore a combination of several parameters and metrics, which we shall now delve into. It is essential to note that, in blockchain terminology, “scalable” is a comparative term and must not be confused with scalability.

Throughput

Throughput is determined by the time required to commit a valid transaction to the blockchain and by the size of the block that holds it. For perspective, traditional institutions with centralized infrastructure, such as Visa, can process around 1,700 transactions per second (TPS), whereas Bitcoin can process only about 7 TPS. The vast difference stems from Bitcoin’s design, which spends considerable processing power and time to achieve decentralization and privacy for its users. Transactions on decentralized networks must go through several steps, including acceptance, mining, distribution, and eventually validation by a global network of nodes.
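The link between block size, transaction size, and block time can be made concrete with a back-of-the-envelope calculation. This is a sketch under stated assumptions: a 1 MB block, an average transaction of about 250 bytes, and roughly 600 seconds between blocks. These inputs are illustrative, not measured values.

```python
def estimate_tps(block_size_bytes: int, avg_tx_bytes: int, block_interval_s: float) -> float:
    """Upper bound on throughput: transactions that fit in one block / block time."""
    txs_per_block = block_size_bytes // avg_tx_bytes
    return txs_per_block / block_interval_s


# Assumed inputs: 1 MB blocks, ~250-byte transactions, ~600 s between blocks.
bitcoin_like = estimate_tps(1_000_000, 250, 600)
print(f"{bitcoin_like:.1f} TPS")  # 6.7 TPS
```

The formula also shows why the naive levers are block size and block interval. Both come with costs, as the following paragraphs explain.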

Fitting more transactions into each block requires a larger block size, which has its own set of problems. In theory, increasing the block size should enhance network speed and capacity; however, larger blocks add to the cost because they increase the storage requirement and involve higher power usage. This adds to the burden of maintaining a full node, forcing out nodes that cannot afford the processing requirements. Moreover, larger blocks take longer to relay across the network.
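The storage burden on full nodes grows linearly with block size. The following minimal sketch assumes a 10-minute block interval (144 blocks per day) and that every block is full, then computes how much the ledger would grow per year for a few block sizes.

```python
BLOCKS_PER_DAY = 24 * 60 * 60 // 600  # assumes a 10-minute block interval -> 144


def annual_growth_gb(block_size_mb: float) -> float:
    """Ledger growth per year if every block is full (1 GB = 1000 MB)."""
    return block_size_mb * BLOCKS_PER_DAY * 365 / 1000


for size_mb in (1, 8, 32):
    print(f"{size_mb:>2} MB blocks -> {annual_growth_gb(size_mb):,.1f} GB/year")
```

Under these assumptions, 1 MB, 8 MB, and 32 MB blocks add roughly 52.6 GB, 420.5 GB, and 1,681.9 GB per year respectively. Every full node must store and process this data.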

Transaction delays affect scalability, and transaction fees play a significant role here. Users pay a fee to have their transactions verified on the blockchain. This fee directly affects confirmation time because it determines which transactions are chosen for processing first. As the number of transactions grows, and because trust must be established between anonymous entities, validation becomes time-consuming. Priority goes to users willing to pay hefty fees, leaving many other transactions unprocessed in the queue for a long time.
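The fee-driven prioritization described above can be sketched as a greedy block builder. It sorts pending transactions by fee per byte and fills the block until it is full. This is a simplified, hypothetical policy: `PendingTx` and `select_for_block` are illustrative names, and real node software uses more elaborate selection rules, for example accounting for dependent transactions.

```python
from dataclasses import dataclass


@dataclass
class PendingTx:
    txid: str
    size_bytes: int
    fee: int  # total fee offered, in the chain's smallest unit

    @property
    def fee_rate(self) -> float:
        return self.fee / self.size_bytes


def select_for_block(mempool: list, max_block_bytes: int) -> tuple:
    """Greedy selection by fee rate; leftovers stay queued for a later block."""
    chosen, waiting, used = [], [], 0
    for tx in sorted(mempool, key=lambda t: t.fee_rate, reverse=True):
        if used + tx.size_bytes <= max_block_bytes:
            chosen.append(tx)
            used += tx.size_bytes
        else:
            waiting.append(tx)
    return chosen, waiting


mempool = [
    PendingTx("a", 250, 5_000),   # 20 per byte
    PendingTx("b", 250, 1_000),   # 4 per byte
    PendingTx("c", 500, 15_000),  # 30 per byte
    PendingTx("d", 250, 2_500),   # 10 per byte
]
chosen, waiting = select_for_block(mempool, max_block_bytes=1_000)
print([t.txid for t in chosen], [t.txid for t in waiting])  # ['c', 'a', 'd'] ['b']
```

The lowest-paying transaction (`b`) is left waiting. Under sustained demand it can remain in the queue for many blocks.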

Cost and Capacity

From the genesis block to the most recent transaction, all data is stored on the blockchain. However, nodes have limited storage capacity, and if the blockchain grows rapidly, the storage requirement increases substantially, making it difficult for nodes to store such massive amounts of information. In addition, the transaction processing power of blockchains is limited, and their underlying structure impedes scalability. There is a reason these blockchains have limited capacity: caps are placed to prevent inconvenience and inefficiency in management. If blockchains were permitted to grow uncontrollably, nodes would not be able to keep up.

Networking

Every new transaction on the blockchain needs to be broadcast to all participating nodes. As the number of nodes increases, so does the time required to propagate a transaction, degrading overall performance. Moreover, every newly mined block is also broadcast to all nodes. Network resource consumption is therefore significant, which calls for efficient data transmission and dedicated network bandwidth to minimize delays and improve throughput.
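Why propagation time grows with both network size and block size can be seen in a rough gossip model. This sketch makes loud simplifying assumptions: each hop multiplies the number of informed nodes by a fixed fan-out, nodes send to their peers in parallel, and every link has the same latency and bandwidth. The parameter values in the loop are hypothetical.

```python
import math


def propagation_time_s(num_nodes: int, peers_per_node: int, block_mb: float,
                       bandwidth_mbps: float, link_latency_s: float) -> float:
    """Rough gossip estimate: number of hops x time per hop.

    Assumes the informed set grows by a factor of `peers_per_node` each hop and
    that each node sends to its peers in parallel over links of equal bandwidth.
    """
    hops = math.ceil(math.log(num_nodes) / math.log(peers_per_node))
    transmit_s = block_mb * 8 / bandwidth_mbps  # megabytes -> megabits
    return hops * (link_latency_s + transmit_s)


for nodes in (1_000, 10_000, 100_000):
    for size_mb in (1, 32):
        t = propagation_time_s(nodes, peers_per_node=8, block_mb=size_mb,
                               bandwidth_mbps=50, link_latency_s=0.1)
        print(f"{nodes:>7} nodes, {size_mb:>2} MB block: ~{t:.1f} s")
```

More nodes add hops, while larger blocks add transmission time at every hop. The two effects multiply.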

With characteristics and benefits such as transparency, trust, immutability, and data security, blockchain technology offers a world of opportunities extending beyond the financial sector. Its inherent security mechanism and public ledger system have found use in non-financial applications, drawing a wide range of users. Despite these benefits and use cases, scalability is a major challenge affecting blockchain development and widespread adoption. The settlement times and usability of centralized systems are far superior to those of decentralized networks, and the unsolved problem of scalability remains a major stumbling block to adoption. As the number of users on the network increases, high transaction fees, slow transaction speeds, and an unsatisfactory user experience prevent blockchain technology from realizing its full potential.

One might assume that changing blockchain parameters, such as increasing the block size or reducing the block generation time, would solve the problem of scalability. More space does mean more transactions; however, the block size cannot scale infinitely, and its current ability to scale does not allow blockchains to match or compete with existing centralized systems. Moreover, such changes require costly hardware for nodes to stay in sync with the network. The root of the problem lies in the very design of the network: many of the constraints that hinder scalability come packaged with blockchain’s value propositions.

In the following section, we shall explore the various Blockchain scalability solutions, but before we do that, it is important to understand the Blockchain Trilemma.

The Blockchain Trilemma

The Blockchain Trilemma is a widely held view, popularized by Vitalik Buterin (co-founder of Ethereum), that the three essential properties of a blockchain (decentralization, security, and scalability) cannot perfectly co-exist, implying that a network can fully achieve only two of the three. Greater scalability can therefore be achieved, but only at the expense of security or decentralization. Historically, blockchains have prioritized decentralization and security over scalability, which is reflected in their low transaction volumes. However, greater scalability is essential for decentralized networks to compete fairly with high-performance legacy platforms.

With blockchain technology being deployed across various industries, from finance to art, it is of paramount importance to address the issue of scalability without compromising decentralization and security. Scaling solutions can be categorized as Layer 1 and Layer 2 solutions.

Layer 1 network is the foundational layer, essentially the blockchain itself, whereas a Layer 2 protocol operates on top of an underlying blockchain to improve its efficiency and scalability. Examples of Layer 1 networks include Bitcoin, Ethereum, Cosmos, Solana, ICP, and Avalanche.

Blockchain Scalability Solutions

Layer 1 (On-Chain) Solutions

Layer 1 solutions optimize performance and improve scalability by making structural and fundamental changes to the base protocol itself. Since the changes are incorporated onto the chain, these solutions are often referred to as On-Chain Solutions.

L1 solutions are designed to enhance a network’s attributes and characteristics in order to accommodate more users and data. Changes to the protocol’s rules are made to increase transaction speed and capacity. These changes involve accelerating the speed of block confirmation as well as increasing the amount of data being stored in each block, all directed at enhancing overall network throughput. Other changes that networks resort to in order to achieve scalability include sharding and consensus protocol improvements.

Sharding

Adapted from distributed databases, sharding means partitioning the database into smaller data sets, or “shards”. This technique spreads the computational workload across a peer-to-peer (P2P) network and significantly reduces processing time, because each shard is responsible for processing only a portion of the network’s data. Sharding allows more nodes to connect and disperses data storage across the network, improving overall efficiency and management. Shards are processed in parallel, so the network does not have to process every transaction sequentially. Sharding thus contributes to greater efficiency (in computation, communication, and storage) as well as higher network participation. Theoretically, there is no cap on the number of shards, and by adding shards on demand, a blockchain should be able to scale infinitely. In practice, however, this is not possible owing to tight coupling and other limitations such as shard takeovers or collusion between shards. A corrupted shard taking over another can cause catastrophic damage, including permanent loss of the corresponding portion of data. Furthermore, the risk of a malicious node injecting corrupt information and broadcasting it to the wider network cannot be ignored.
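The partitioning idea can be sketched by mapping each account to a shard with a hash and then processing each shard’s batch independently. This is a minimal sketch under stated assumptions: `NUM_SHARDS`, `shard_for`, and `process_shard` are hypothetical, transactions are routed by sender only, and cross-shard effects are ignored here.

```python
import hashlib
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

NUM_SHARDS = 4  # hypothetical shard count


def shard_for(account: str) -> int:
    # Deterministic mapping: every node computes the same shard for an account.
    digest = hashlib.sha256(account.encode()).digest()
    return int.from_bytes(digest[:8], "big") % NUM_SHARDS


def process_shard(shard_id: int, txs: list) -> tuple:
    # Stand-in for validating and executing only this shard's slice of the workload.
    return shard_id, len(txs)


txs = [("alice", 5), ("bob", 3), ("carol", 7), ("dave", 1), ("erin", 2)]
by_shard = defaultdict(list)
for sender, amount in txs:
    by_shard[shard_for(sender)].append((sender, amount))

# Shards do not depend on each other here, so their batches are processed concurrently.
with ThreadPoolExecutor() as pool:
    results = dict(pool.map(lambda item: process_shard(*item), by_shard.items()))
print(results)
```

Because the mapping is a pure function of the account name, any node can tell which shard is responsible for a transaction without coordinating with the others.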

Because each shard behaves like a separate blockchain network, proper inter-shard communication is essential for users and applications on one shard to interact with those on another. An improper implementation can jeopardize the security of the entire network, for example by allowing double-spending.
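One common way to move value between shards without enabling double-spending is a two-step, receipt-based transfer. The source shard debits the sender and emits a receipt, and the destination shard credits the receiver at most once per receipt. The sketch below assumes this receipt pattern. The `Shard` class is hypothetical, and it omits the proof (for example, a Merkle proof) that a real destination shard would check against the source shard’s committed state.

```python
class Shard:
    def __init__(self, balances: dict):
        self.balances = dict(balances)
        self.consumed_receipts: set = set()

    def debit(self, account: str, amount: int, receipt_id: str) -> dict:
        # Step 1 (source shard): remove the funds and emit a receipt.
        if self.balances.get(account, 0) < amount:
            raise ValueError("insufficient funds")
        self.balances[account] -= amount
        return {"id": receipt_id, "amount": amount}

    def credit(self, account: str, receipt: dict) -> None:
        # Step 2 (destination shard): honour each receipt exactly once.
        if receipt["id"] in self.consumed_receipts:
            raise ValueError("receipt already used (double-spend attempt)")
        self.consumed_receipts.add(receipt["id"])
        self.balances[account] = self.balances.get(account, 0) + receipt["amount"]


shard_a = Shard({"alice": 10})
shard_b = Shard({"bob": 0})
receipt = shard_a.debit("alice", 4, receipt_id="tx-1")
shard_b.credit("bob", receipt)
try:
    shard_b.credit("bob", receipt)  # replaying the same receipt is rejected
except ValueError as err:
    print(err)
print(shard_a.balances, shard_b.balances)  # {'alice': 6} {'bob': 4}
```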

Consensus Protocol

The consensus protocol is a key component of any blockchain and is responsible for maintaining the security and integrity of the network. It is a set of rules that governs all activity on the blockchain and ensures that participating nodes agree only on valid transactions. Some protocols are more efficient than others. Proof of Work (PoW), an extremely secure but resource-intensive protocol, is the consensus mechanism for Bitcoin, Litecoin, and Ethereum. PoW is time-consuming and uses substantial computational power to solve cryptographic puzzles, making it slow compared with protocols such as Proof-of-Stake (PoS). With PoS, validators are picked based on the collateral they have staked in the network rather than on the computational work they perform. Ethereum is on track to transition to PoS with Ethereum 2.0, a move expected to drastically enhance network capacity, enabling faster transactions and lower fees. Other consensus protocols that serve as effective scalability solutions include Proof-of-Authority, Delegated Proof-of-Stake, and Byzantine Fault Tolerance.
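Stake-weighted selection, the core idea behind PoS as described above, can be sketched as follows: each validator is chosen with probability proportional to its stake, using a seed that all nodes agree on. This is an illustrative assumption, not any specific chain’s algorithm. Real protocols derive the shared seed from on-chain randomness (Ethereum uses RANDAO) and add many further rules. `pick_validator` is a hypothetical name.

```python
import hashlib


def pick_validator(stakes: dict, seed: bytes) -> str:
    """Choose a proposer with probability proportional to stake.

    `seed` stands in for a shared randomness source that all nodes agree on.
    """
    total = sum(stakes.values())
    # Map the seed deterministically to a point in [0, total).
    point = int.from_bytes(hashlib.sha256(seed).digest(), "big") % total
    cumulative = 0
    for validator in sorted(stakes):  # fixed order so every node gets the same answer
        cumulative += stakes[validator]
        if point < cumulative:
            return validator
    raise AssertionError("unreachable: point is always below total stake")


stakes = {"v1": 32, "v2": 64, "v3": 160}
counts = {v: 0 for v in stakes}
for slot in range(10_000):
    counts[pick_validator(stakes, slot.to_bytes(8, "big"))] += 1
print(counts)  # roughly 1/8, 1/4 and 5/8 of the slots
```

No puzzle-solving is involved. Selection costs one hash per slot, which is why PoS is far less resource-intensive than PoW.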

Layer 2 (Off-Chain) Solutions

An L2 solution is built on top of an existing blockchain with the primary purpose of improving its scalability and efficiency. The most prominent examples include the Bitcoin Lightning Network and Ethereum Plasma. An L2 solution essentially offloads the transactional burden from the L1 blockchain: an auxiliary framework lets L2 process transactions independently, off the main chain, and report to L1 for final settlement. This is why L2 solutions are also referred to as Off-Chain solutions.

A major benefit of L2 frameworks is their ease of deployment. These solutions are integrated as an extra layer on top of the base layer and require no structural or fundamental changes to the mainchain. Because they settle on L1, many L2 solutions can inherit the properties that make L1 secure and decentralized. Delegating most data processing to L2 reduces congestion on L1, improving scalability and TPS and lowering transaction fees.

The quest for scalability has led to the emergence of the following L2 solutions.

Nested Blockchains

A nested blockchain (L2) runs atop another blockchain (L1). Put simply, L1 establishes the network’s parameters, and L2 handles execution. Multiple blockchain levels can be built on a single mainchain, with each level operating as a separate blockchain in a parent-child relationship with the mainchain. The parent chain controls the overall parameters and delegates work to the child chains, which process it and, upon completion, return the results to the parent chain. Under this hierarchical model, child chains take instructions from the main chain, reducing the network’s load and enabling fast transactions at lower cost. The OMG Plasma Project, built atop Ethereum, is an example of a nested blockchain.
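A child chain can report its work to the parent without posting every transaction by committing a compact digest, such as a Merkle root of its block’s transactions. The sketch below assumes this Plasma-style commitment scheme in simplified form. `merkle_root` uses a Bitcoin-like convention of duplicating the last node on odd levels, and `ParentChain` is a hypothetical stand-in for the root-chain contract.

```python
import hashlib


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list) -> bytes:
    """Pairwise-hash leaves up to a single 32-byte root.

    Odd levels duplicate their last node (a Bitcoin-style convention).
    """
    if not leaves:
        return sha256(b"")
    level = [sha256(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


class ParentChain:
    def __init__(self):
        self.commitments = []  # (child_id, block_number, root)

    def commit(self, child_id: str, block_number: int, root: bytes) -> None:
        self.commitments.append((child_id, block_number, root))


# The child chain executes transactions itself and reports only a digest upward.
child_txs = [b"alice->bob:5", b"bob->carol:2", b"carol->dave:1"]
parent = ParentChain()
parent.commit("child-1", 1, merkle_root(child_txs))
print(parent.commitments[0][2].hex())
```

However many transactions the child block contains, the parent stores only 32 bytes per commitment. Any later change to a child transaction would produce a different root.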

State Channels

A State Channel facilitates two-way communication between a blockchain and an off-chain transactional channel. Think of it as allowing a group of participants to conduct an unlimited number of transactions off-chain instead of on the blockchain. This significantly cuts waiting time, because validating transactions in a state channel does not require the immediate involvement of miners. Instead, the channel is a network-adjacent resource sealed off by a multi-signature or smart contract mechanism pre-agreed by the participants.

Once the transaction, or batch of transactions, between the participants is complete, the final “state” of the “channel” is recorded on-chain in a new block. State channels thus enable fast, low-cost off-chain transactions whose outcome is secured permanently on-chain.

State channel transactions are not public; only participants within a channel can view them, which gives them considerable privacy. Only the initial and final states are recorded on the main chain. As soon as all participants sign a state update, it is considered final, so state channels offer instant finality. However, state channels work best for applications with a defined set of participants who can remain available around the clock.
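The life cycle described above can be sketched as follows: participants repeatedly co-sign a new balance state with an increasing nonce off-chain, and only the final co-signed state is submitted for settlement. This is a sketch under loud assumptions. HMAC with hypothetical per-participant keys stands in for real digital signatures such as ECDSA, and the dispute logic a real channel contract needs (for example, preferring the highest nonce during a challenge period) is omitted.

```python
import hashlib
import hmac
import json

# Hypothetical per-participant keys. HMAC stands in for real digital signatures
# (e.g. ECDSA) only to keep the sketch free of third-party dependencies.
KEYS = {"alice": b"alice-secret", "bob": b"bob-secret"}


def sign(party: str, state: dict) -> str:
    message = json.dumps(state, sort_keys=True).encode()
    return hmac.new(KEYS[party], message, hashlib.sha256).hexdigest()


def co_sign(state: dict) -> dict:
    # A state update only counts once every participant has signed it.
    return {"state": state, "sigs": {party: sign(party, state) for party in KEYS}}


def settle_on_chain(update: dict) -> dict:
    # The (simulated) on-chain contract checks all signatures before accepting.
    for party in KEYS:
        expected = sign(party, update["state"])
        if not hmac.compare_digest(expected, update["sigs"].get(party, "")):
            raise ValueError(f"missing or invalid signature from {party}")
    return update["state"]


# Opening balances are locked on-chain; the payments below happen off-chain.
latest = co_sign({"nonce": 0, "alice": 10, "bob": 0})
for nonce, paid in enumerate([2, 3, 1], start=1):
    prev = latest["state"]
    latest = co_sign({"nonce": nonce, "alice": prev["alice"] - paid, "bob": prev["bob"] + paid})

print(settle_on_chain(latest))  # {'nonce': 3, 'alice': 4, 'bob': 6}
```

Three payments happened, but only one state (the last) touches the chain.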

Examples include Celer, Bitcoin’s Lightning, and Ethereum’s Raiden Network.

Side Chains

Designed to process a large number of transactions, a side chain is a transactional chain that connects to another blockchain via a 2-way peg (2WP). It operates independently and utilizes a distinct consensus protocol that can be optimized for speed and scalability. Utility tokens form an integral part of the data transfer mechanism between the sidechain and mainchain. In this setup, the mainchain’s principal role is to confirm transaction records, maintain overall security and handle disputes.
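The essence of a 2-way peg is that coins locked on the mainchain are represented on the sidechain and released only when the sidechain representation is destroyed, so the total supply is conserved. The toy model below assumes a single trusted bookkeeper. `TwoWayPeg` is a hypothetical class, and real pegs need a mechanism (such as a federation of signers or cryptographic proofs) to convince each chain that the other side acted.

```python
class TwoWayPeg:
    """Toy 2-way peg: lock on the main chain, mint on the sidechain, and back."""

    def __init__(self):
        self.main_balances: dict = {}
        self.side_balances: dict = {}
        self.locked_on_main = 0

    def peg_in(self, user: str, amount: int) -> None:
        if self.main_balances.get(user, 0) < amount:
            raise ValueError("insufficient main-chain balance")
        self.main_balances[user] -= amount
        self.locked_on_main += amount  # coins are locked, not destroyed
        self.side_balances[user] = self.side_balances.get(user, 0) + amount

    def peg_out(self, user: str, amount: int) -> None:
        if self.side_balances.get(user, 0) < amount:
            raise ValueError("insufficient sidechain balance")
        self.side_balances[user] -= amount  # burned on the sidechain...
        self.locked_on_main -= amount       # ...and released on the main chain
        self.main_balances[user] = self.main_balances.get(user, 0) + amount

    def supply_is_conserved(self) -> bool:
        return sum(self.side_balances.values()) == self.locked_on_main


peg = TwoWayPeg()
peg.main_balances["alice"] = 10
peg.peg_in("alice", 6)   # lock 6 on main, mint 6 on the sidechain
peg.peg_out("alice", 2)  # burn 2 on the sidechain, release 2 on main
print(peg.main_balances, peg.side_balances, peg.locked_on_main)  # {'alice': 6} {'alice': 4} 4
```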

Sidechains differ from state channels in several ways. First, sidechains maintain a public record of all transactions in their ledger. Second, a security breach on a sidechain has no implications for the mainchain or other sidechains, making sidechains extremely useful for experimenting with new protocols or improvements to the mainchain.

However, setting up a sidechain entails a significant amount of time and effort. Sidechains do not derive security from the mainchain; instead, they operate as independent chains responsible for their own security, which can be compromised if sidechain validators collude to act maliciously or if validating power is poorly distributed.

Examples of sidechains include Liquid, Loom, and Rootstock.

Rollups

Rollups are L2 solutions that execute transactions outside L1 but post the transaction data on it, retaining L1 security. In essence, the burdensome task of processing transactions is offloaded to L2, and then highly compressed data is rolled up into batches and sent to L1 for storage. This arrangement enables Rollups to provide users with lower gas fees and higher transaction processing capacity whilst retaining L1 security.
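Why batching and compression lower per-transaction costs can be illustrated by comparing the bytes needed to post transactions one by one against posting a single compressed batch. The transfer records are hypothetical, and `zlib` over JSON is only a stand-in. Real rollups use their own compact encodings and compression schemes.

```python
import json
import zlib

# Hypothetical transfer records; real rollups use compact binary encodings.
txs = [{"from": f"user{i % 50}", "to": f"user{(i * 7) % 50}", "amount": i % 100, "nonce": i}
       for i in range(1_000)]

individual_bytes = sum(len(json.dumps(tx).encode()) for tx in txs)
batch = zlib.compress(json.dumps(txs).encode(), level=9)

print(f"posted individually: {individual_bytes / len(txs):.1f} bytes/tx")
print(f"posted as one compressed batch: {len(batch) / len(txs):.1f} bytes/tx")
```

Because L1 charges for the data it stores, fewer bytes per transaction translates directly into lower fees. Any fixed per-submission overhead is also amortized across the whole batch.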

Rollups can have different security models. The two most prominent methods include:

Optimistic Rollups – offer significant improvements in scalability because they optimistically assume that transactions are valid. They provide a waiting (challenge) period during which anyone can dispute a batch’s validity by submitting a fraud proof. If fraud is proven, the disputed computation is re-executed and the invalid state is rejected; otherwise, the batch becomes final on the ledger once the period ends. Examples of Optimistic Rollups include Arbitrum and Optimism.

Zero-knowledge Rollups – run computations off-chain and generate a validity proof (a cryptographic proof that the batch was executed correctly) for the whole bundle of transactions. The validity proof is then submitted to the mainchain, or base layer, for verification. Examples of ZK Rollups include zkSync and Starknet.
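The optimistic model can be sketched as a contract that accepts a claimed post-batch state without checking it, lets anyone re-execute the batch during a challenge window, and finalizes the claim only after the window closes. This is a simplified sketch under stated assumptions. `CHALLENGE_WINDOW`, `apply_batch`, and `OptimisticRollup` are hypothetical. Production systems use bonded, often interactive fault proofs and penalize dishonest posters, which is not modelled here. ZK proof generation is out of scope for a short example.

```python
CHALLENGE_WINDOW = 100  # hypothetical length, counted in L1 blocks


def apply_batch(state: dict, batch: list) -> dict:
    """Deterministic rollup rules: apply (sender, receiver, amount) transfers."""
    new_state = dict(state)
    for sender, receiver, amount in batch:
        if new_state.get(sender, 0) >= amount:  # overdrafts are skipped
            new_state[sender] -= amount
            new_state[receiver] = new_state.get(receiver, 0) + amount
    return new_state


class OptimisticRollup:
    def __init__(self, genesis: dict):
        self.finalized = dict(genesis)
        self.pending = None  # (batch, claimed_state, posted_at_block)

    def post(self, batch: list, claimed_state: dict, block: int) -> None:
        # Accepted without verification: the "optimistic" assumption.
        self.pending = (batch, claimed_state, block)

    def challenge(self, block: int) -> bool:
        """Re-execute the batch; discard the claim if it does not match."""
        batch, claimed, posted_at = self.pending
        if block - posted_at > CHALLENGE_WINDOW:
            return False  # too late, the claim stands
        if apply_batch(self.finalized, batch) != claimed:
            self.pending = None  # fraud proven: the claimed state is rejected
            return True
        return False

    def finalize(self, block: int) -> None:
        _, claimed, posted_at = self.pending
        if block - posted_at <= CHALLENGE_WINDOW:
            raise RuntimeError("challenge window still open")
        self.finalized, self.pending = claimed, None


rollup = OptimisticRollup({"alice": 10, "bob": 0})
batch = [("alice", "bob", 4)]
rollup.post(batch, {"alice": 1, "bob": 9}, block=1)   # dishonest claim
print(rollup.challenge(block=50))                     # True: fraud detected
rollup.post(batch, {"alice": 6, "bob": 4}, block=60)  # honest claim
print(rollup.challenge(block=70))                     # False: claim checks out
rollup.finalize(block=161)
print(rollup.finalized)                               # {'alice': 6, 'bob': 4}
```

The waiting period is the price of skipping up-front verification: funds are only final after the window closes.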

Let us understand how different blockchains are using some of these solutions to achieve Scalability.

Bitcoin

Bitcoin and Ethereum are two blockchains that trade scalability for decentralization and security. Scalability is a constant issue, and Bitcoin has been grappling with it since its inception. Bitcoin started out with low fees and predictable block times, but its success has become its nemesis: increased adoption brought high fees and network congestion. The initial proposal focused on increasing the block size. The majority of the community disagreed, and the dispute led to the creation of Bitcoin Cash, a Bitcoin fork, whose block size was raised to 32 MB. However, expanding the block size is only a temporary solution, as the problem is likely to resurface as network usage grows.

Bitcoin then implemented Segregated Witness (SegWit), which improves scalability by changing how transaction data is structured and stored. SegWit separates each transaction’s signature (“witness”) data from the rest of the transaction and counts it at a discount toward a new block weight limit, freeing up considerable space and thereby increasing capacity. However, the upgrade did not fully solve the scalability problem, so Bitcoin turned to the Lightning Network, an L2 solution. The Lightning Network uses smart contract functionality to establish payment channels between transacting parties. It boosts speed and reduces cost substantially because payments take place off-chain, without verification by the mainchain. However, to use this network, transacting parties must fulfill certain conditions, such as opening and funding a channel or being connected through a path of channels with enough liquidity. It works well for simple payments, and improvements and upgrades are constantly being made to enhance what it delivers. If successful, it could garner widespread adoption.
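The SegWit capacity gain comes from how block weight is counted. Per BIP 141, weight = base size × 3 + total size, which means non-witness bytes count four times and witness bytes once, against a limit of 4,000,000 weight units. The sketch applies that formula. The 250-byte transaction and its 150/100 split between non-witness and witness data are assumptions chosen for illustration.

```python
MAX_BLOCK_WEIGHT = 4_000_000  # consensus limit introduced by BIP 141


def tx_weight(non_witness_bytes: int, witness_bytes: int) -> int:
    # BIP 141: weight = base_size * 3 + total_size, i.e. non-witness bytes
    # count 4x and witness (signature) bytes count 1x.
    base_size = non_witness_bytes
    total_size = non_witness_bytes + witness_bytes
    return base_size * 3 + total_size


legacy = tx_weight(250, 0)    # signatures kept inside the base transaction
segwit = tx_weight(150, 100)  # assumed split: 100 bytes moved to the witness
print(legacy, segwit)                                     # 1000 700
print(MAX_BLOCK_WEIGHT // legacy, MAX_BLOCK_WEIGHT // segwit)  # 4000 5714
```

With no witness data, the weight limit is equivalent to the old 1 MB cap (4,000 transactions of 250 bytes). Moving signatures into the witness lets more transactions of the same size fit into a block.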

Ethereum, on the other hand, deploys more complex L2 solutions, so let’s look into those.

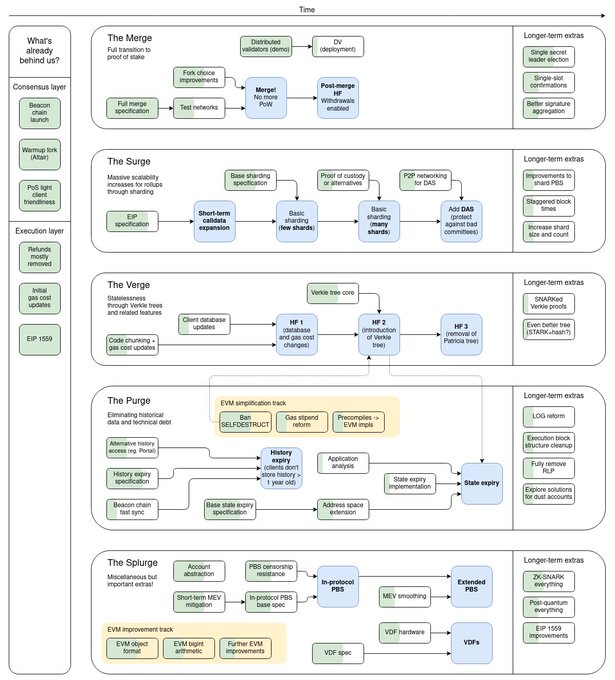

Ethereum 2.0 or Serenity

Designed to ease bottlenecks and enhance efficiency, speed, and scalability, Ethereum 2.0 refers to the network’s transition to a PoS-based system. Ethereum 2.0 shall support sharding and other scalability solutions to increase transaction throughput. The shift involves multiple steps, but the highlight is the migration to a PoS consensus mechanism, a less energy-intensive technology.

As the second-largest blockchain network in the cryptosphere, Ethereum aims to solve its congestion issue by deploying shards, which will enable the network to spread its load. The Ethereum shards will be organized around the Beacon Chain (a PoS blockchain launched in December 2020); each shard will function as a separate chain employing proof-of-stake to operate securely, and the shards will coordinate through the Beacon Chain. At present, the Beacon Chain is separate from the Mainnet, but a merger is planned for the future. As per the roadmap, Ethereum is set to launch 64 new shards to help expand network capacity, and each node on Ethereum will store one shard. An important implication is a lower hardware requirement, which makes it easier to run a node, broadens network participation, and supports higher levels of decentralization. The current plan uses shards for the aggregation and movement of data, but in time, like the Mainnet, shards will be able to execute code. As mentioned, sharding is not risk-free, and Ethereum aims to address the associated security risk by randomly assigning nodes to shards and reassigning them at random intervals.
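Random assignment with periodic reshuffling can be sketched with a shared seed per epoch: every node shuffles the same validator list with the same seed and deals validators out to shards. The sketch assumes Python’s `random.Random` as the shuffle source, which is not cryptographically secure. Ethereum’s actual specification uses a different shuffling algorithm seeded from on-chain randomness. `assign_committees` is a hypothetical name, and the 64-shard count follows the roadmap figure above.

```python
import random

NUM_SHARDS = 64  # shard count from the roadmap described above


def assign_committees(validators: list, epoch_seed: int) -> dict:
    """Shuffle validators with a shared seed and deal them out round-robin."""
    rng = random.Random(epoch_seed)  # every node derives the same shuffle
    shuffled = validators[:]
    rng.shuffle(shuffled)
    committees = {shard: [] for shard in range(NUM_SHARDS)}
    for i, validator in enumerate(shuffled):
        committees[i % NUM_SHARDS].append(validator)
    return committees


validators = [f"validator-{i}" for i in range(6_400)]
epoch_1 = assign_committees(validators, epoch_seed=1)
epoch_2 = assign_committees(validators, epoch_seed=2)  # reshuffle next epoch
print(len(epoch_1[0]), epoch_1[0][:3], epoch_2[0][:3])
```

An attacker controlling some validators cannot choose which shard they land on. Because committees change every epoch, any concentration that occurs by chance does not last.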

Furthermore, rather than relying solely on changes to the base layer (consensus protocol improvements plus sharding), Ethereum developers are adopting a mixed approach to avoid the risk of centralization and reduced security. They aim to leverage Layer 2 solutions (a combination of sidechains and rollups) to maximize efficiency and scalability. With Eth 2.0 and rollups working in tandem, Ethereum’s transactional capacity is expected to reach 100,000 transactions per second. However, a fully functional version of the Beacon Chain, with its accompanying PoS consensus mechanism and shard chains, is unlikely to arrive in the near future. Furthermore, even a full-scale implementation does not guarantee infinite scalability.

At present, plenty of layer 2 solutions are available for Ethereum that help solve the issue of scalability to some degree. These include:

-Plasma

-Payment Channels

-Sidechains

-ZK-Rollups and Optimistic Rollups

-Layer 1 EVM-compatible blockchains that include Cosmos, Polkadot, and Avalanche

Each solution has its own tradeoffs. Polygon, formerly known as Matic, is worth mentioning. A Plasma-based aggregator, Polygon is a blockchain scalability platform native to Ethereum. Essentially, Polygon operates atop Ethereum and processes batches of transactions on its own PoS blockchain, diverting traffic from the Mainnet. In addition, Polygon provides a robust framework for building dApps off-chain with enhanced security and speed. Dubbed Ethereum’s internet of blockchains, Polygon aims to create a multichain ecosystem of Ethereum-compatible blockchains while offering interoperability with sidechains, sovereign blockchains, and other L2 solutions. However, Polygon faces stiff competition from blockchains such as Avalanche, Cosmos, and Solana, which support bridges and permit the trade of several variants of Ethereum tokens.

Polkadot

Founded by Gavin Wood (one of the founders of Ethereum), Polkadot envisions itself as a next-generation blockchain protocol that unites an entire network of purpose-built blockchains, allowing them to operate seamlessly together at scale.

Both Polkadot and Ethereum 2.0 are sharding-based protocols, but they approach sharding differently. Each shard on Ethereum 2.0 will have the same state transition function (STF), an interface provision for smart contract execution, which will enable contracts on one shard to exchange asynchronous messages with other shards. The Beacon Chain will enable smart contract execution through the Ethereum Wasm interface (eWasm). Polkadot deploys a different variation of sharding. Its ecosystem is composed of a Relay Chain (the main chain) that connects and supports all the shards, which are referred to as parachains. The network currently supports 100 parachains. Each parachain on Polkadot can expose a custom interface rather than relying on a single interface like Ethereum 2.0’s eWasm. Because each parachain connects to the Relay Chain individually, developers have flexibility in setting the rules for how each shard changes its state.
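The contrast between one shared STF and per-parachain STFs can be sketched as a relay chain that stores a separate validation function for each registered parachain. It applies that function to check each new parachain block. Plain Python functions stand in here for the compiled validation code Polkadot actually uses. `RelayChain`, `payments_stf`, `counter_stf`, and the `(old_state, block) -> new_state` signature are all hypothetical.

```python
from typing import Callable, Dict

# Hypothetical STF signature: (old_state, block) -> new_state; raises on invalid blocks.
STF = Callable[[dict, dict], dict]


def payments_stf(state: dict, block: dict) -> dict:
    new = dict(state)
    for sender, receiver, amount in block["transfers"]:
        if new.get(sender, 0) < amount:
            raise ValueError("overdraft")
        new[sender] -= amount
        new[receiver] = new.get(receiver, 0) + amount
    return new


def counter_stf(state: dict, block: dict) -> dict:
    return {"count": state.get("count", 0) + block["increments"]}


class RelayChain:
    def __init__(self):
        self.stfs: Dict[int, STF] = {}
        self.heads: Dict[int, dict] = {}

    def register(self, para_id: int, stf: STF, genesis: dict) -> None:
        self.stfs[para_id] = stf
        self.heads[para_id] = genesis

    def include(self, para_id: int, block: dict) -> bool:
        """Validate a parachain block with that parachain's own rules."""
        try:
            self.heads[para_id] = self.stfs[para_id](self.heads[para_id], block)
            return True
        except ValueError:
            return False


relay = RelayChain()
relay.register(1000, payments_stf, {"alice": 5})
relay.register(2000, counter_stf, {"count": 0})
print(relay.include(1000, {"transfers": [("alice", "bob", 3)]}))  # True
print(relay.include(1000, {"transfers": [("bob", "carol", 9)]}))  # False
print(relay.include(2000, {"increments": 4}), relay.heads[2000])  # True {'count': 4}
```

Each parachain can define entirely different state and rules. The relay chain only needs to know which function to run for which parachain.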

Polkadot has managed to establish a decent position for itself as Ethereum struggles with scalability woes. Ethereum, with more than 1 million transactions per day, has a much larger user and developer base than any of its rivals, giving it a competitive edge. However, the delay in the launch of Ethereum 2.0 has allowed various platforms, including Polkadot, to offer additional attributes such as superior interoperability, a key challenge in the world of blockchain.

It’s difficult to determine the future of Ethereum 2.0 or Polkadot especially when other interoperability solutions aim to alleviate dependence on such platforms by spreading the load of computational work across blockchains.

Solana

Solana’s architecture is designed so that transaction performance scales linearly and does not deteriorate as throughput grows. Solana employs Proof of History (PoH) as a tool within its Proof of Stake consensus. PoH is a novel time-based mechanism for synchronizing time across nodes, which speeds up transaction validation and contributes to Solana’s ability to execute up to 50,000 TPS. Solana supports parallel validation and execution of transactions, but the technique that genuinely optimizes transaction validation is pipelining, which streamlines the validation process and makes Solana an ultrafast, scalable blockchain.
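The idea behind Proof of History can be sketched as a sequential SHA-256 chain. Each hash takes the previous output as input, so producing the chain takes real time, and events mixed into the chain are fixed at a position in that sequence. This is a simplified sketch; Solana’s real data structures and parameters differ. `poh_sequence`, `verify_segment`, and `verify` are hypothetical names. The verifier splits the chain into independent segments, which shows why checking can be parallelized even though generation cannot.

```python
import hashlib


def poh_sequence(seed: bytes, ticks: int, events: dict) -> list:
    """Build a sequential SHA-256 chain; `events` maps tick index -> data mixed in."""
    state = hashlib.sha256(seed).digest()
    entries = []
    for tick in range(ticks):
        data = events.get(tick, b"")
        # Each hash depends on the previous output, so generation is inherently sequential.
        state = hashlib.sha256(state + data).digest()
        entries.append((tick, data, state))
    return entries


def verify_segment(start: bytes, segment: list) -> bool:
    state = start
    for _, data, recorded in segment:
        state = hashlib.sha256(state + data).digest()
        if state != recorded:
            return False
    return True


def verify(seed: bytes, entries: list, segment_len: int = 100) -> bool:
    # Each segment starts from the hash recorded just before it, so segments are
    # independent and could be checked on separate cores.
    starts = [hashlib.sha256(seed).digest()] + [entry[2] for entry in entries]
    return all(verify_segment(starts[i], entries[i:i + segment_len])
               for i in range(0, len(entries), segment_len))


entries = poh_sequence(b"genesis", 1_000, {250: b"tx:alice->bob:5"})
print(verify(b"genesis", entries))  # True
entries[250] = (250, b"tx:alice->bob:500", entries[250][2])  # rewrite a recorded event
print(verify(b"genesis", entries))  # False
```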

The network has suffered several interruptions in the recent past. Solana claims the interruptions were an outcome of resource exhaustion. Whether these outages are mere roadblocks in Solana’s journey to solve the inherent problem of scalability or a cause of significant concern remains to be seen.

Cosmos

Built to facilitate communication between standalone distributed ledgers without intermediaries, Cosmos is dubbed the Internet of Blockchains. Cosmos describes itself as an expansive ecosystem built on a set of modular, adaptable, and interchangeable tools. Through its Hubs, the Inter-Blockchain Communication protocol (IBC), the Tendermint Byzantine Fault Tolerance (BFT) engine, and the Cosmos Software Development Kit (a framework for building application-specific blockchains), Cosmos provides the infrastructure needed to simplify the development of powerful, interoperable blockchains.

Cosmos provides an ecosystem that permits blockchains to seamlessly communicate through IBC and Peg Zones without compromising sovereignty. Cosmos addresses the notorious issue of scalability through its horizontal and vertical scalability solutions.

Cosmos places a high value on new blockchains handling large transaction volumes efficiently and quickly. It leverages two types of scalability –

1. Vertical scalability – techniques for scaling the blockchain itself. By moving away from the PoW system, Tendermint BFT can achieve thousands of transactions per second. However, the outcome of these solutions depends largely on the application itself, as Tendermint simply improves on what is available.

2. Horizontal scalability – these tend to focus on scaling out and addressing the limits of vertical scalability. The transactions per second cannot be enhanced beyond a certain point even if the consensus engine and applications are highly optimized. Thus, the solution entails a move to multichain architectures. The whole idea is to not be limited by the throughput of a single blockchain. Cosmos aims to have multiple parallel chains with no limit on the number of chains that can be connected to the network. This theoretically makes it infinitely scalable as more chains can be added when the existing ones reach their maximum TPS.
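The Tendermint BFT engine mentioned in item 1 commits a block once validators holding more than two-thirds of the total voting power have signed (precommitted) it. Because this requires no mining, it is fast. The sketch shows only that quorum rule, under the assumption of a fixed, known validator set. The full protocol also has proposal and prevote steps, timeouts, and locking rules, which are omitted. `has_quorum` is a hypothetical name.

```python
def has_quorum(voting_power: dict, precommits: set) -> bool:
    """Tendermint-style commit rule: signers must hold more than 2/3 of total power."""
    total = sum(voting_power.values())
    signed = sum(power for v, power in voting_power.items() if v in precommits)
    return 3 * signed > 2 * total  # integer form of signed / total > 2/3


powers = {"val-a": 40, "val-b": 30, "val-c": 20, "val-d": 10}
print(has_quorum(powers, {"val-a", "val-b"}))           # True  (70 of 100)
print(has_quorum(powers, {"val-b", "val-c", "val-d"}))  # False (60 of 100)
```

The integer comparison avoids floating-point error at the boundary. Exactly two-thirds is not enough, and the comparison reflects that.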

Other blockchains claiming infinite scalability with their novel technological solutions include Avalanche and ICP.

Avalanche

Developed by Ava Labs to address limitations of older blockchain platforms, Avalanche strives to deliver on high performance, extreme scaling capabilities, fast confirmation times, and affordability. It provides a platform for users to easily develop multi-functional blockchains and dApps. Its core value proposition is the deployment of a subnet architecture.

Subnets (short for subnetworks) are dynamic sets of validators tasked with achieving consensus on a certain set of blockchains. An innovative response to the trilemma, subnets offer a unique form of scaling that cannot exactly be categorized as an L2 solution. Subnets offer reliability, security, and decentralization, as well as flexibility in design and implementation. They are, in fact, closely related to sharding, creating new, separate but connected instances of the same blockchain. The critical difference is that subnets are generated algorithmically and can be created by users as and when required. Moreover, sharding is built into the protocol, whereas subnets are limitless, meaning an unlimited number of subnets can be launched. Subnets can include virtual machines and multiple blockchains, and they can be deployed for different use cases.

Subnets on Avalanche

The Avalanche Network comprises three interoperable chains: the X-Chain, P-Chain, and C-Chain. The X-Chain handles sending and receiving transfers, the C-Chain is the platform’s Ethereum Virtual Machine (EVM) instance for smart contracts, and the P-Chain coordinates validators and creates and manages subnets. The P-Chain and C-Chain use Snowman consensus to enable high-throughput, secure smart contracts, while the X-Chain uses the DAG-optimized Avalanche consensus, a scalable protocol that enables low latency and fast finality.
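The consensus family named above works by repeated random subsampling rather than all-to-all voting. The sketch below simulates a simplified, binary Snowball-style protocol among honest nodes. Each node repeatedly samples `k` peers, counts a query as successful if at least `alpha` of them agree, tracks confidence per colour, and decides after `beta` consecutive successes. The parameter values, the function name, and the all-honest setting are assumptions for illustration. This is not Avalanche’s production implementation.

```python
import random


def snowball(initial: list, k: int = 10, alpha: int = 7, beta: int = 15,
             max_rounds: int = 1_000, seed: int = 0) -> list:
    """Simulate binary Snowball-style consensus among honest nodes."""
    rng = random.Random(seed)
    n = len(initial)
    preference = list(initial)
    confidence = [[0, 0] for _ in range(n)]
    last_colour = [None] * n
    streak = [0] * n
    decided = [None] * n
    for _ in range(max_rounds):
        if None not in decided:
            break
        for node in range(n):
            if decided[node] is not None:
                continue
            peers = rng.sample([p for p in range(n) if p != node], k)
            ones = sum(preference[p] for p in peers)
            if ones >= alpha:
                colour = 1
            elif k - ones >= alpha:
                colour = 0
            else:
                streak[node] = 0  # an unsuccessful query resets the streak
                continue
            confidence[node][colour] += 1
            if confidence[node][colour] > confidence[node][preference[node]]:
                preference[node] = colour
            if colour == last_colour[node]:
                streak[node] += 1
            else:
                last_colour[node], streak[node] = colour, 1
            if streak[node] >= beta:
                decided[node] = colour
    return decided


# 100 honest nodes, 90 of which initially prefer colour 1.
result = snowball([1] * 90 + [0] * 10)
print(set(result))
```

Each node contacts only `k` peers per round regardless of network size. This is what lets this style of consensus scale to many validators.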

Validators on Avalanche are required to secure and validate all three chains that together form the Primary Network. This requirement essentially enables easy connectivity and implementation between the subnets.

Subnets and Scaling

Subnets on Avalanche enable individual projects to build and connect to the Mainnet via individual chains. This reduces the amount of space they take up on the Mainnet. Adopting the aforementioned strategy enables Avalanche to divert traffic and avoid network congestion addressing the pressing issue of high gas fees and low transaction speed as it scales up.

Broadly speaking, subnets contribute to scaling in multiple ways that include –

1. Allowing competing ideas to co-exist for a single cryptocurrency. Avalanche states that, in the case of incompatible sharding ideas, two different subnets with differing sharding schemes can be deployed. Alternatively, a subnet with two subchains can try out a different sharding scheme on each (with validation assigned to a common validator).

2. Permitting the creation of unlimited subnets for differing use cases. A new subnet can be launched when a chain hits its scaling limit. There are no restrictions on the number of chains that can be added, which facilitates the creation of application-specific blockchains with their own sets of validators (Note – a validator can validate different subnets while validating the default subnet). Application-specific blockchains can be highly optimized, and because they are siloed from one another, the network can deliver low fees. There are no disadvantages to generating limitless subnets other than possible inconvenience. Theoretically, an entire crypto network (such as Bitcoin or Ethereum) can be ported to Avalanche as a subnet.
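The “launch a new subnet when a chain hits its limit” strategy, together with the rule that subnet validators must also validate the Primary Network, can be sketched as follows. Everything here is hypothetical and illustrative: the validator names, the 5,000 TPS per-subnet capacity, and the helper names `create_subnet` and `place_app`. It is a toy placement policy, not Avalanche’s API.

```python
from dataclasses import dataclass, field

PRIMARY_NETWORK_VALIDATORS = {"v1", "v2", "v3", "v4", "v5"}  # hypothetical set


@dataclass
class Subnet:
    name: str
    validators: set
    capacity_tps: int
    apps: dict = field(default_factory=dict)  # app name -> demanded TPS

    def load(self) -> int:
        return sum(self.apps.values())


def create_subnet(name: str, validators: set, capacity_tps: int) -> Subnet:
    # Subnet validators must also validate the Primary Network.
    if not validators <= PRIMARY_NETWORK_VALIDATORS:
        raise ValueError("all subnet validators must validate the Primary Network")
    return Subnet(name, set(validators), capacity_tps)


def place_app(subnets: list, app: str, tps: int, validators: set,
              capacity_tps: int = 5_000) -> Subnet:
    """Put an app on a subnet with spare capacity, launching a new one if none fits."""
    for subnet in subnets:
        if subnet.load() + tps <= subnet.capacity_tps:
            subnet.apps[app] = tps
            return subnet
    subnet = create_subnet(f"subnet-{len(subnets) + 1}", validators, capacity_tps)
    subnet.apps[app] = tps
    subnets.append(subnet)
    return subnet


subnets: list = []
for app, demand in [("dex", 3_000), ("game", 2_500), ("nft", 1_000)]:
    placed = place_app(subnets, app, demand, {"v1", "v2", "v3"})
    print(app, "->", placed.name)  # dex -> subnet-1, game -> subnet-2, nft -> subnet-1
```

Total capacity grows with the number of subnets rather than being capped by any single chain.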

Avalanche claims anyone can launch a subnet on the network, and because the creation of subnets has no bounds, the potential to scale up will always exist.

ICP is another blockchain built for scalability. Let’s look at a network that goes beyond achieving security, decentralization, and scalability and focuses on building the future of Web 3.0 – a decentralized internet and global computing system.

Internet Computer

Internet Computer (IC) is an infinitely scalable general-purpose blockchain that delivers a decentralized Internet by running smart contracts at web speed. It allows developers to install smart contracts and dApps directly on the blockchain, and it can host decentralized financial platforms, enterprise systems, NFTs, web-based services, and tokenized social media platforms entirely on-chain. IC can be described as a sovereign decentralized network that delivers web content without relying on cloud computing services.

DFINITY (the organization behind IC) set out to resolve the longstanding scalability issue by developing a network that claims to run at web speed with infinite scalability potential. The effort produced novel cryptography and computer science, which were used to build and launch the Internet Computer.

Hosted on node machines and operated by independent, geographically separated parties, the internet computer nodes run on ICP, a proprietary protocol (Internet Computer Protocol). None of the nodes on IC are hosted by cloud providers ensuring a tamper-proof and stable network. ICP is a secure cryptographic protocol that ensures the security of the smart contracts running on the blockchain. The Internet Computer comprises individual subnet blockchains that run parallel to each other and are connected using Chain Key cryptography. Subnets on IC do not employ PoS or PoW consensus mechanisms to process transactions but utilize Chain Key cryptography. This technology permits canisters (smart contracts on IC) on one subnet to seamlessly call canisters hosted on other subnets. DFINITY claims these canisters are smart contracts that scale. Moreover, Chain Key technology allows IC to finalize transactions that alter the state of smart contracts in one to two seconds by splitting up smart contract function execution into two types – update calls and query calls. Another notable feature is the network’s decentralized, permissionless governance system Network Nervous System (NNS) which runs on-chain. NNS is designed to scale the network capacity when required. It does so by spinning up new subnet blockchains.

IC derives its ability to scale infinitely from partitioning the network into subnet blockchains. Each subnet blockchain on IC can independently process update calls and query calls, so the network can be scaled easily by adding more subnets, and NNS permits the addition of an unlimited number of them. Subnets seem to be a secure and popular way to achieve scalability, which raises the question of how IC differs from Avalanche.
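The split between update calls (which change state and go through consensus) and query calls (read-only, answered without consensus) can be illustrated with a toy model. The same model shows scaling by adding subnets. This is a sketch under heavy simplification: consensus is simulated by applying every update to all replicas in order, a query is answered by one replica, and the `Subnet` and `Network` classes, the per-subnet canister limit, and `add_subnet` (standing in for the NNS) are all hypothetical.

```python
class Subnet:
    """Toy replicated subnet: each replica keeps its own copy of canister state."""

    def __init__(self, name: str, num_replicas: int = 4):
        self.name = name
        self.replicas = [{} for _ in range(num_replicas)]

    def update_call(self, canister: str, key: str, value) -> None:
        # State-changing call. Consensus is simulated by having every replica
        # apply the same update in the same order.
        for replica in self.replicas:
            replica.setdefault(canister, {})[key] = value

    def query_call(self, canister: str, key: str):
        # Read-only call answered by a single replica, skipping consensus.
        return self.replicas[0].get(canister, {}).get(key)


class Network:
    def __init__(self, canisters_per_subnet: int = 2):
        self.capacity = canisters_per_subnet  # hypothetical per-subnet limit
        self.subnets: list = []
        self.routing: dict = {}  # canister name -> hosting subnet

    def add_subnet(self) -> Subnet:
        # Stand-in for the NNS approving a new subnet blockchain.
        subnet = Subnet(f"subnet-{len(self.subnets) + 1}")
        self.subnets.append(subnet)
        return subnet

    def install(self, canister: str) -> Subnet:
        for subnet in self.subnets:
            hosted = sum(1 for s in self.routing.values() if s is subnet)
            if hosted < self.capacity:
                self.routing[canister] = subnet
                return subnet
        self.routing[canister] = self.add_subnet()
        return self.routing[canister]


net = Network()
for name in ("wallet", "forum", "game"):
    print(name, "->", net.install(name).name)  # subnet-1, subnet-1, subnet-2
net.routing["forum"].update_call("forum", "posts", 3)
print(net.routing["forum"].query_call("forum", "posts"))  # 3
```

Queries avoid consensus and are therefore fast. Updates pay the consensus cost, but only within the subnet hosting the canister, so adding subnets adds capacity.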

Internet Computer and Avalanche

While both platforms deploy subnets and claim infinite scalability, there are a few key differences. ICP claims an advantage over Avalanche owing to its ability to host websites and social networks; it is designed to deliver a Decentralized World Computer and is currently the only blockchain in that category. Avalanche gives stiff competition to other L1s by supporting speeds of up to 4,500 TPS, and according to its website, future upgrades could raise this to 20,000 TPS without sharding or L2 solutions. IC has demonstrated its ability to process 11,500 TPS. Finality is another metric of speed and scalability, and both chains claim sub-2-second finality.

IC requires nodes to be pre-approved and complete full KYC coupled with the need to operate from designated data centers with highly customized specifications. Avalanche has modest hardware requirements to deliver on maximum decentralization. Performance is CPU-bound, and if the network dictates the need for higher performance, specialized subnets can be launched.

Closing Thoughts

Blockchain is slowly but steadily becoming a base layer for varied use cases across diverse sectors. For it to serve billions of users across the globe, one can only imagine the kind of scalability solutions and infrastructure that would need to exist. Given the growing demand, there is room for all of these solutions to co-exist. What will be required, however, is constant evolution, including new ideas that have not yet been developed.